Eigenvalues and Eigenvectors

YouTube lecture recording from October 2020

The following YouTube video was recorded for the 2020 iteration of the course.

The material is still very similar:

Using Python to calculate the inverse

$$A = \begin{pmatrix}3&0&2\\ 3&0&-3\\ 0&1&1\end{pmatrix}$$

NumPy

```python
import numpy as np

A = np.array([[3, 0, 2], [3, 0, -3], [0, 1, 1]])
np.linalg.inv(A)
```

```
array([[ 0.2       ,  0.13333333,  0.        ],
       [-0.2       ,  0.2       ,  1.        ],
       [ 0.2       , -0.2       , -0.        ]])
```

SymPy

```python
import sympy as sp

A = sp.Matrix([[3, 0, 2], [3, 0, -3], [0, 1, 1]])
A.inv()
```

$$\left[\begin{matrix}\frac{1}{5} & \frac{2}{15} & 0\\ -\frac{1}{5} & \frac{1}{5} & 1\\ \frac{1}{5} & -\frac{1}{5} & 0\end{matrix}\right]$$

It doesn't always work

Consider the following system

$$\begin{array}{cccccccc}
eq1: & x & + & y & + & z & = & a \\
eq2: & 2x & + & 5y & + & 2z & = & b \\
eq3: & 7x & + & 10y & + & 7z & = & c
\end{array}$$

```python
A = np.array([[1, 1, 1], [2, 5, 2], [7, 10, 7]])
np.linalg.inv(A)
```

```
---------------------------------------------------------------------------
LinAlgError                               Traceback (most recent call last)
Cell In[5], line 2
      1 A = np.array([[1, 1, 1], [2, 5, 2], [7, 10, 7]])
----> 2 np.linalg.inv(A)

File ~/GitRepos/gutenberg-material/DtcMathsStats2018/venv2/lib/python3.10/site-packages/numpy/linalg/linalg.py:561, in inv(a)
    559 signature = 'D->D' if isComplexType(t) else 'd->d'
    560 extobj = get_linalg_error_extobj(_raise_linalgerror_singular)
--> 561 ainv = _umath_linalg.inv(a, signature=signature, extobj=extobj)
    562 return wrap(ainv.astype(result_t, copy=False))

File ~/GitRepos/gutenberg-material/DtcMathsStats2018/venv2/lib/python3.10/site-packages/numpy/linalg/linalg.py:112, in _raise_linalgerror_singular(err, flag)
    111 def _raise_linalgerror_singular(err, flag):
--> 112     raise LinAlgError("Singular matrix")

LinAlgError: Singular matrix
```

Singular matrices

The rank of an $n\times n$ matrix $A$ is the number of linearly independent rows (equivalently, columns) of $A$.

When $\text{rank}(A) < n$:

The system $A\textbf{x} = \textbf{b}$ effectively has fewer (independent) equations than unknowns.

The matrix is said to be singular.

The determinant of $A$ is zero.

The equation $A\textbf{u} = \textbf{0}$ has non-trivial solutions ($\textbf{u} \neq \textbf{0}$).
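As a quick numerical check of these statements, the sketch below uses the singular matrix from the "It doesn't always work" example and only standard NumPy calls (`np.linalg.matrix_rank`, `np.linalg.det`). Floating-point arithmetic is inexact, so the determinant is compared with zero using a tolerance (an arbitrary choice of `1e-9` here) rather than tested for exact equality.

```python
import numpy as np

# Singular matrix from the earlier example: row 3 = 5*(row 1) + (row 2)
A = np.array([[1, 1, 1], [2, 5, 2], [7, 10, 7]])
n = A.shape[0]

rank = np.linalg.matrix_rank(A)
det = np.linalg.det(A)

print("rank:", rank, "of", n)                 # rank < n, so A is singular
print("determinant is ~0:", abs(det) < 1e-9)

# A non-trivial solution of A u = 0
u = np.array([1, 0, -1])
print("A @ u =", A @ u)                       # the zero vector
```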

Singular matrix example

An under-determined system (fewer equations than unknowns) has either infinitely many solutions or no solutions.

An example with many solutions is

$$\begin{aligned}
x+y &= 1,\\
2x+2y &= 2,
\end{aligned}$$

which has infinitely many solutions ($x=0, y=1$; $x=-89.3, y=90.3$; ...).

An example with no solutions is

$$\begin{aligned}
x+y &= 1,\\
2x+2y &= 0,
\end{aligned}$$

where the second equation is inconsistent with the first.

These examples both use the coefficient matrix

$$\begin{pmatrix}1&1\\ 2&2\end{pmatrix},$$

whose second row is twice the first, so its rank is 1 and it is singular.
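One way to tell the two cases apart numerically is a standard rank test (the Rouché-Capelli theorem, which these notes do not otherwise cover): if the augmented matrix $[A \mid \textbf{b}]$ has a larger rank than $A$, the system has no solution; if the ranks are equal but smaller than the number of unknowns, there are infinitely many. The helper `classify` below is hypothetical, written only for this illustration.

```python
import numpy as np

def classify(A, b):
    """Hypothetical helper: classify the system A x = b by comparing ranks."""
    n_unknowns = A.shape[1]
    rank_A = np.linalg.matrix_rank(A)
    # Augmented matrix [A | b]: b becomes an extra column
    rank_Ab = np.linalg.matrix_rank(np.column_stack([A, b]))
    if rank_Ab > rank_A:
        return "no solutions"
    if rank_A < n_unknowns:
        return "infinitely many solutions"
    return "unique solution"

A = np.array([[1, 1], [2, 2]])
print(classify(A, np.array([1, 2])))  # x + y = 1, 2x + 2y = 2 -> infinitely many solutions
print(classify(A, np.array([1, 0])))  # x + y = 1, 2x + 2y = 0 -> no solutions
```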

Null space

When a matrix is singular we can find non-trivial solutions to $A\textbf{u} = \textbf{0}$.

These vectors form the null space of $A$.

Adding any vector in the null space to $\textbf{x}$ makes no difference to the effect that $A$ has:

$$A(\textbf{x} + \textbf{u}) = A\textbf{x} + A\textbf{u} = A\textbf{x} + \textbf{0} = A\textbf{x}.$$

Note that any linear combination or scaling of vectors in the null space is also in the null space.

That is, if $A\textbf{u} = \textbf{0}$ and $A\textbf{v} = \textbf{0}$, then for any scalars $\alpha$ and $\beta$

$$A(\alpha\textbf{u} + \beta\textbf{v}) = \textbf{0}.$$

The number of linearly independent vectors in the null space is denoted $\text{null}(A)$ (the nullity of $A$), and for an $n\times n$ matrix

$$\text{null}(A) + \text{rank}(A) = \text{order}(A) = n.$$
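A small numerical illustration of both facts, using `scipy.linalg.null_space` (which returns an orthonormal basis of the null space as the columns of an array). The rank-1 matrix is a hypothetical example chosen so that the null space has two independent directions; the scalars `alpha` and `beta` are arbitrary.

```python
import numpy as np
import scipy.linalg

# Hypothetical rank-1 example: every row is a multiple of (1, 1, 1)
A = np.array([[1, 1, 1], [2, 2, 2], [3, 3, 3]])

N = scipy.linalg.null_space(A)    # columns form a basis of the null space
nullity = N.shape[1]
rank = np.linalg.matrix_rank(A)
print("null(A) + rank(A) =", nullity + rank, "= order", A.shape[0])

# Any combination alpha*u + beta*v of null-space vectors is still sent to 0
u, v = N[:, 0], N[:, 1]
alpha, beta = 2.5, -4.0
print(np.allclose(A @ (alpha * u + beta * v), 0))
```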

Null space example

Recall the singular system from the previous example:

$$A = \left(\begin{matrix} 1& 1& 1\\ 2& 5& 2\\ 7& 10&7 \end{matrix}\right)$$

```python
A = np.array([[1, 1, 1], [2, 5, 2], [7, 10, 7]])
np.linalg.matrix_rank(A)  # 2: row 3 = 5*(row 1) + (row 2)
```

```python
import scipy.linalg

scipy.linalg.null_space(A)
```

```
array([[-7.07106781e-01],
       [-1.11022302e-16],
       [ 7.07106781e-01]])
```

Up to rounding error this is $(-1, 0, 1)^T/\sqrt{2}$. Remember that scaled vectors in the null space are also in the null space, for example $x=1, y=0, z=-1$. So what is

$$\left(\begin{matrix} 1& 1& 1\\ 2& 5& 2\\ 7& 10&7 \end{matrix}\right) \left(\begin{matrix} -1000\\ 0 \\ 1000 \end{matrix}\right) = \quad ?$$

```python
np.matmul(A, np.array([-1000, 0, 1000]))  # array([0, 0, 0])
```

Eigenvectors: motivation

The eigenvalues and eigenvectors give an indication of how much effect the matrix has, and in what direction.

$$A=\left(\begin{matrix} \cos(45^\circ)&-\sin(45^\circ)\\ \sin(45^\circ)&\cos(45^\circ)\end{matrix}\right)\qquad\text{has no scaling effect (it is a rotation by } 45^\circ\text{).}$$

$$B=\left(\begin{matrix} 2& 0 \\ 0&\frac{1}{2}\end{matrix}\right)\qquad\qquad\text{doubles in }x\text{, but halves in }y\text{.}$$

Repeated applications of $A$ just rotate a point around a circle centred on the origin, while repeated applications of $B$ send a point with $x > 0$ towards $(\infty, 0)$.
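The sketch below applies each matrix ten times to the point $(1, 1)$. It assumes the $45$ in the rotation matrix means $45^\circ$, hence the conversion with `np.radians`; the starting point and the number of steps are arbitrary choices for illustration.

```python
import numpy as np

theta = np.radians(45)  # assumes the angle is 45 degrees
A = np.array([[np.cos(theta), -np.sin(theta)],
              [np.sin(theta),  np.cos(theta)]])
B = np.array([[2.0, 0.0],
              [0.0, 0.5]])

p = np.array([1.0, 1.0])
q = np.array([1.0, 1.0])
for _ in range(10):
    p = A @ p   # rotate by 45 degrees
    q = B @ q   # double x, halve y

print("after 10 rotations:", p, "length", np.linalg.norm(p))  # length stays sqrt(2)
print("after 10 applications of B:", q)                      # x grows, y shrinks

# The eigenvalues summarise the scaling: |lambda| = 1 for A, 2 and 0.5 for B
print(np.abs(np.linalg.eigvals(A)), np.linalg.eigvals(B))
```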

Eigenvalues and eigenvectors also appear in:

Transitions with probability

Solution of systems of linear ODEs

Stability of systems of nonlinear ODEs

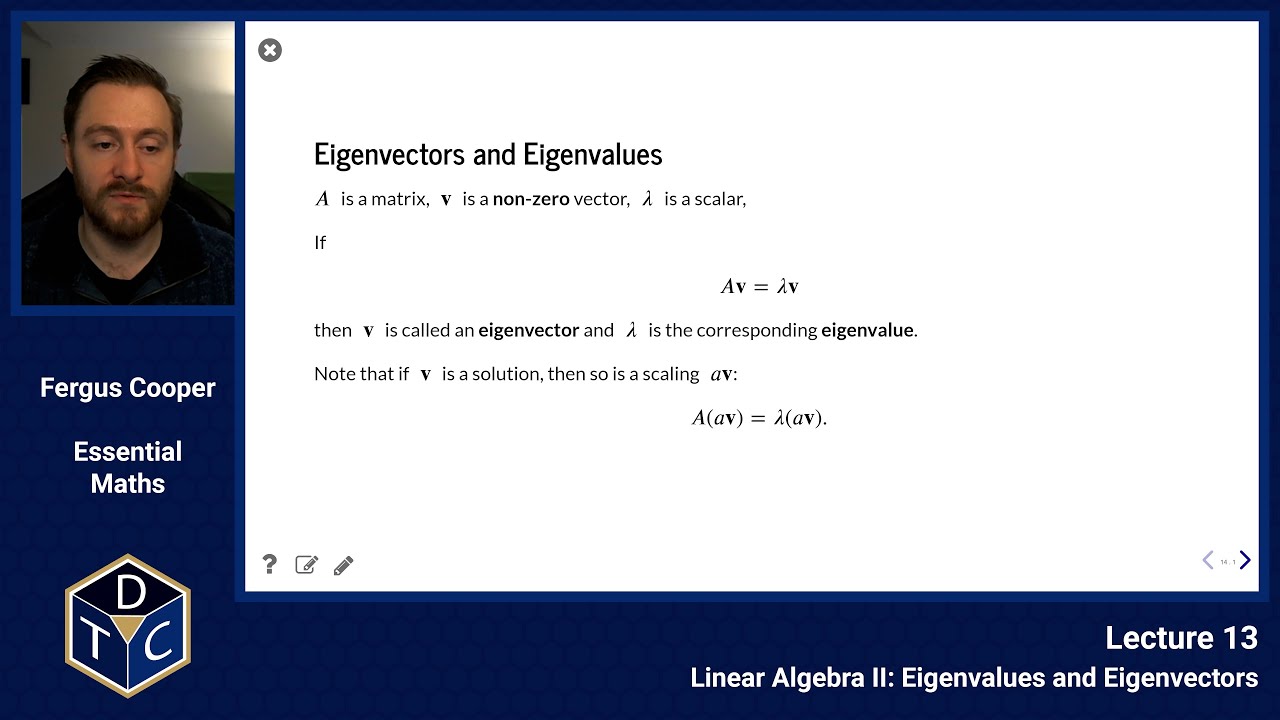

Eigenvectors and Eigenvalues

If $A$ is a square matrix, $\textbf{v}$ is a non-zero vector, $\lambda$ is a scalar and

$$A \textbf{v} = \lambda \textbf{v},$$

then $\textbf{v}$ is an eigenvector of $A$ and $\lambda$ is the corresponding eigenvalue.

Note that if $\textbf{v}$ is an eigenvector, then so is $a\textbf{v}$ for any non-zero scalar $a$, since

$$A (a \textbf{v}) = a(A\textbf{v}) = a(\lambda\textbf{v}) = \lambda (a \textbf{v}).$$
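A direct check of the definition, borrowing the $2\times 2$ matrix that is worked through in the examples later in this section, for which $\textbf{v} = (2, 1)^T$ is an eigenvector with $\lambda=-3$. The scale factor $a = 5$ is an arbitrary choice.

```python
import numpy as np

A = np.array([[-2, -2], [1, -5]])
v = np.array([2, 1])
lam = -3

print(A @ v, lam * v)                # both are [-6 -3]

a = 5                                # any non-zero scalar
print(A @ (a * v), lam * (a * v))    # both are [-30 -15]
```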

Finding Eigenvalues

Another way to write the previous equation is

$$\begin{aligned}
A \textbf{v} &= \lambda \textbf{v},\\
A \textbf{v} - \lambda I \textbf{v} &= \textbf{0},\\
(A - \lambda I) \textbf{v} &= \textbf{0}.
\end{aligned}$$

Now consider a general equation of this form,

$$B\textbf{x}=\textbf{0},$$

where $B$ is a square matrix and $\textbf{x}$ is a non-zero vector.

Assume $B$ has an inverse $B^{-1}$. Multiplying both sides by $B^{-1}$ gives

$$B^{-1}(B\textbf{x})=B^{-1}\textbf{0}=\textbf{0},$$

but also

$$B^{-1}(B\textbf{x})=(B^{-1}B)\textbf{x}=I\textbf{x}=\textbf{x},$$

so

$$B^{-1}(B\textbf{x})=\textbf{0}=\textbf{x}.$$

But we stated above that $\textbf{x}\neq\textbf{0}$, so $B$ cannot have an inverse: $B$ must be singular. In our case

$$(A-\lambda I)\textbf{v} = \textbf{0} \quad \text{with} \quad \textbf{v}\neq \textbf{0},$$

so $(A-\lambda I)$ must be singular, which means its determinant is zero:

$$|A-\lambda I|=0.$$
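A numerical sanity check of this condition: for each eigenvalue returned by `np.linalg.eigvals`, the determinant of $A - \lambda I$ should be zero up to rounding error, while at a value that is not an eigenvalue it is not. The matrix is the one used in the example that follows; the test value $\lambda = 1$ is arbitrary.

```python
import numpy as np

A = np.array([[-2.0, -2.0], [1.0, -5.0]])
I = np.eye(2)

for lam in np.linalg.eigvals(A):
    # |A - lambda I| vanishes (up to rounding error) at each eigenvalue
    print(lam, np.linalg.det(A - lam * I))

# At a value that is not an eigenvalue it does not: lambda = 1 gives 1 + 7 + 12 = 20
print(np.linalg.det(A - 1.0 * I))
```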

Example

What are the eigenvalues for this matrix?

$$A=\left(\begin{matrix}-2&-2\\ 1&-5\end{matrix}\right)$$

$$\begin{aligned}
|A-\lambda I| &= \left\vert\begin{matrix}-2-\lambda&-2\\ 1&-5-\lambda\end{matrix}\right\vert = (-2-\lambda)(-5-\lambda)-(-2)(1)\\
&= 10+5\lambda+\lambda^2+2\lambda+2 = \lambda^2+7\lambda+12 = (\lambda+3)(\lambda+4) = 0
\end{aligned}$$

So the eigenvalues are $\lambda_1=-3$ and $\lambda_2=-4$.
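The same hand calculation can be reproduced symbolically. This sketch forms $|A - \lambda I|$ with SymPy, expands and factorises it, and solves for $\lambda$; the symbol name `lam` is just a choice, since `lambda` is a reserved word in Python.

```python
import sympy as sp

lam = sp.symbols('lam')
A = sp.Matrix([[-2, -2], [1, -5]])

char_poly = (A - lam * sp.eye(2)).det()   # |A - lambda I|
print(sp.expand(char_poly))               # expands to lam**2 + 7*lam + 12
print(sp.factor(char_poly))               # factorises as (lam + 3)(lam + 4)
print(sp.solve(char_poly, lam))           # the roots -4 and -3
```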

Eigenvalues using Python

```python
A = np.array([[-2, -2], [1, -5]])
np.linalg.eig(A)[0]  # eig returns (eigenvalues, eigenvectors); [0] is the eigenvalues
```

```python
A2 = sp.Matrix([[-2, -2], [1, -5]])
A2.eigenvals()
```

$$\left\{ -4 : 1, \ -3 : 1\right\}$$

SymPy returns a dictionary mapping each eigenvalue to its algebraic multiplicity: here $-4$ and $-3$ each occur once.

Finding Eigenvectors

For each eigenvalue, the corresponding eigenvectors come from substituting it into $A \textbf{v} = \lambda \textbf{v}$ and solving for $\textbf{v}$.
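In practice this substitution amounts to finding the null space of $A - \lambda I$ for each eigenvalue, as in the null space section above. A SymPy sketch using `Matrix.nullspace()`, applied to the matrix from the example that follows:

```python
import sympy as sp

A = sp.Matrix([[-2, -2], [1, -5]])
I = sp.eye(2)

for lam in A.eigenvals():                # dictionary keys are the eigenvalues
    basis = (A - lam * I).nullspace()    # solves (A - lambda I) v = 0
    for v in basis:
        print(lam, v.T, A * v == lam * v)
```

Each basis vector is one representative eigenvector; any non-zero multiple of it works equally well.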

Example

What are the eigenvectors for

$$A=\left(\begin{matrix}-2&-2\\ 1&-5\end{matrix}\right)?$$

The eigenvalues are $\lambda_1=-3$ and $\lambda_2=-4$. Call the corresponding eigenvectors $\textbf{v}_1$ and $\textbf{v}_2$. For $\textbf{v}_1$,

$$A\textbf{v}_1=\lambda_1 \textbf{v}_1,$$

$$\left(\begin{matrix}-2&-2\\ 1&-5\end{matrix}\right) \left(\begin{matrix}u_1\\ v_1\end{matrix}\right) = \left(\begin{matrix}-3u_1\\ -3v_1\end{matrix}\right).$$

Either the top or the bottom equation gives

$$u_1 = 2v_1,$$

so any non-zero vector of this form is an eigenvector for $\lambda_1=-3$, for example

$$\left(\begin{matrix}u_1\\ v_1\end{matrix}\right) = \left(\begin{matrix}2 \\ 1\end{matrix}\right), \left(\begin{matrix}1 \\ 0.5\end{matrix}\right), \left(\begin{matrix}-4.4 \\ -2.2\end{matrix}\right), \left(\begin{matrix}2\alpha \\ \alpha\end{matrix}\right)\ldots$$

Eigenvectors in Python

```python
A = np.array([[-2, -2], [1, -5]])
np.linalg.eig(A)[1]  # the eigenvectors, one per column
```

```
array([[0.89442719, 0.70710678],
       [0.4472136 , 0.70710678]])
```
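NumPy returns eigenvectors scaled to unit length, so the first column above is $(2,1)^T/\sqrt{5}$, one member of the family $(2\alpha, \alpha)^T$ found by hand, and the second is $(1,1)^T/\sqrt{2}$, the eigenvector for $\lambda_2=-4$. A quick check; the overall sign of a returned column is not guaranteed, hence the comparison of absolute values:

```python
import numpy as np

A = np.array([[-2, -2], [1, -5]])
w, v = np.linalg.eig(A)

for lam, col in zip(w, v.T):              # rows of v.T are the columns of v
    print(lam, np.allclose(A @ col, lam * col))

by_hand = np.array([2, 1]) / np.sqrt(5)   # alpha = 1/sqrt(5) in (2*alpha, alpha)
i = np.argmin(np.abs(w - (-3)))           # index of the column for lambda = -3
print(np.allclose(np.abs(v[:, i]), by_hand))
```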

```python
c = sp.symbols('c')

# Replace the top-right entry -2 with a symbol c
A2 = sp.Matrix([[-2, c], [1, -5]])
A2.eigenvects()
```

$$\left[ \left( - \frac{\sqrt{4 c + 9}}{2} - \frac{7}{2}, \ 1, \ \left[ \left[\begin{matrix}\frac{3}{2} - \frac{\sqrt{4 c + 9}}{2}\\1\end{matrix}\right]\right]\right), \ \left( \frac{\sqrt{4 c + 9}}{2} - \frac{7}{2}, \ 1, \ \left[ \left[\begin{matrix}\frac{\sqrt{4 c + 9}}{2} + \frac{3}{2}\\1\end{matrix}\right]\right]\right)\right]$$

Each entry is a tuple (eigenvalue, multiplicity, list of eigenvectors).
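As a consistency check, substituting $c=-2$ should recover the original matrix, for which $\sqrt{4c+9} = 1$: the eigenvalues become $-4$ and $-3$, with eigenvectors $(1,1)^T$ and $(2,1)^T$. A sketch using `subs` on the symbolic result:

```python
import sympy as sp

c = sp.symbols('c')
A2 = sp.Matrix([[-2, c], [1, -5]])

for val, mult, vecs in A2.eigenvects():
    # substitute c = -2 into the eigenvalue and each of its eigenvectors
    print(val.subs(c, -2), [list(v.subs(c, -2)) for v in vecs])
```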

Diagonalising matrices

A square matrix $A$ that has a full set of linearly independent eigenvectors (for example, any matrix with distinct eigenvalues) can be rewritten in terms of its eigenvalues and eigenvectors. Being nonsingular is not enough on its own: $\begin{pmatrix}1&1\\0&1\end{pmatrix}$ is nonsingular but cannot be diagonalised.

If $A$ has eigenvalues $\lambda_1$ and $\lambda_2$ with corresponding eigenvectors $\left(\begin{matrix}u_1\\ v_1\end{matrix}\right)$ and $\left(\begin{matrix}u_2\\ v_2\end{matrix}\right)$, then

$$A = \left(\begin{matrix}u_1 & u_2\\v_1 & v_2\end{matrix}\right) \left(\begin{matrix}\lambda_1 & 0\\0 & \lambda_2\end{matrix}\right) \left(\begin{matrix}u_1 & u_2\\v_1 & v_2\end{matrix}\right)^{-1}.$$

This is like a scaling surrounded by changes of coordinates (rotations or reflections when the eigenvectors are orthonormal), and it separates how much effect the matrix has $(\lambda_i)$ from the directions in which it acts $(\textbf{v}_i)$.
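SymPy can produce this factorisation directly: `Matrix.diagonalize()` returns a pair `(P, D)` with eigenvectors as the columns of `P` and eigenvalues on the diagonal of `D`, such that $A = PDP^{-1}$. The column order and scaling SymPy chooses may differ from a hand calculation, so this sketch only checks the product, which is exact in rational arithmetic.

```python
import sympy as sp

A = sp.Matrix([[-2, -2], [1, -5]])
P, D = A.diagonalize()

print(P)                      # eigenvectors as columns
print(D)                      # eigenvalues on the diagonal
print(P * D * P.inv() == A)   # A = P D P^{-1}
```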

For example

$$A=\left(\begin{matrix}-2&-2\\ 1&-5\end{matrix}\right)$$

```python
A = np.array([[-2, -2], [1, -5]])
w, v = np.linalg.eig(A)       # eigenvalues w, eigenvectors as the columns of v
inv_v = np.linalg.inv(v)
np.matmul(np.matmul(v, np.diag(w)), inv_v)   # v diag(w) v^{-1} recovers A
```

```
array([[-2., -2.],
       [ 1., -5.]])
```

Orthogonal eigenvectors

If $\textbf{x}_1$ and $\textbf{x}_2$ are orthogonal vectors, then their scalar (dot) product is zero:

$$\textbf{x}_1.\textbf{x}_2=0.$$

For example,

$$\textbf{x}_1.\textbf{x}_2=\left(\begin{matrix}2\\ -1\\ 3\end{matrix}\right).\left(\begin{matrix}2\\ 7\\ 1\end{matrix}\right)=(2\times 2)+(-1\times 7)+(3\times1)=4-7+3=0.$$

Since this dot product is zero, the vectors $\textbf{x}_1$ and $\textbf{x}_2$ are orthogonal.
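The same check in NumPy. With floating-point vectors (such as computed eigenvectors) an exact `== 0` test is fragile, so this sketch compares with zero using `np.isclose` and its default tolerances:

```python
import numpy as np

x1 = np.array([2, -1, 3])
x2 = np.array([2, 7, 1])

d = np.dot(x1, x2)
print(d)                 # 4 - 7 + 3 = 0
print(np.isclose(d, 0))  # True, so x1 and x2 are orthogonal
```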

Symmetric matrices

Symmetric matrices have orthogonal eigenvectors (eigenvectors belonging to distinct eigenvalues are always orthogonal), e.g.

$$A=\left(\begin{matrix}19&20&-16\\ 20&13&4 \\ -16&4&31\end{matrix}\right)$$

```python
A = np.array([[19, 20, -16], [20, 13, 4], [-16, 4, 31]])
w, v = np.linalg.eig(A)
print(v)

print('\ndot products of eigenvectors:\n')
print(np.dot(v[:, 0], v[:, 1]))
print(np.dot(v[:, 0], v[:, 2]))
print(np.dot(v[:, 1], v[:, 2]))
```

```
[[ 0.66666667 -0.66666667  0.33333333]
 [-0.66666667 -0.33333333  0.66666667]
 [ 0.33333333  0.66666667  0.66666667]]

dot products of eigenvectors:

-1.942890293094024e-16
1.3877787807814457e-16
1.1102230246251565e-16
```

All three dot products are zero up to floating-point rounding error.
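For symmetric (or Hermitian) matrices NumPy also provides `np.linalg.eigh`, which exploits the symmetry, returns the eigenvalues in ascending order and returns orthonormal eigenvectors. It only reads one triangle of the input, so it should only be used on matrices that really are symmetric. A sketch on the same matrix:

```python
import numpy as np
from itertools import combinations

A = np.array([[19, 20, -16], [20, 13, 4], [-16, 4, 31]])
w, V = np.linalg.eigh(A)   # for symmetric/Hermitian matrices only

print(w)                   # eigenvalues in ascending order

# Each column satisfies A v = lambda v ...
print(np.allclose(A @ V, V * w))

# ... and every pair of columns is orthogonal (dot product ~ 0)
for i, j in combinations(range(3), 2):
    print(i, j, np.isclose(V[:, i] @ V[:, j], 0.0))
```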

Normalised eigenvectors

For a vector

$$\left(\begin{matrix}x\\ y\\ z\end{matrix}\right),$$

the vector

$$\left(\begin{matrix}\frac{x}{\sqrt{x^2+y^2+z^2}}\\ \frac{y}{\sqrt{x^2+y^2+z^2}}\\ \frac{z}{\sqrt{x^2+y^2+z^2}}\end{matrix}\right)$$

is the corresponding normalised vector: a vector of unit length (magnitude).
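A sketch of normalisation with `np.linalg.norm`, which computes $\sqrt{x^2+y^2+z^2}$. Normalising $(2,-2,1)^T$, an eigenvector of the symmetric matrix above (with eigenvalue $-9$), gives $(2/3, -2/3, 1/3)^T$, which matches the first column printed by `np.linalg.eig` in the symmetric matrices example:

```python
import numpy as np

x = np.array([2.0, -2.0, 1.0])
x_hat = x / np.linalg.norm(x)     # norm is sqrt(4 + 4 + 1) = 3

print(x_hat)                      # approximately [ 0.667 -0.667  0.333]
print(np.linalg.norm(x_hat))      # 1 (up to rounding)
```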

Orthogonal matrices

A matrix is orthogonal if its columns are normalised orthogonal vectors.

One can prove that if $M$ is an orthogonal matrix, then

$$M^TM=I, \qquad M^T=M^{-1}.$$
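A numerical check of both identities, taking $M$ to be the matrix of orthonormal eigenvectors of the symmetric example matrix (computed here with `np.linalg.eigh`). Because $M^T = M^{-1}$, the transpose can stand in for the inverse when rebuilding $A$ from its eigenvalues and eigenvectors:

```python
import numpy as np

A = np.array([[19, 20, -16], [20, 13, 4], [-16, 4, 31]])
w, M = np.linalg.eigh(A)   # columns of M: normalised, mutually orthogonal eigenvectors

print(np.allclose(M.T @ M, np.eye(3)))        # M^T M = I
print(np.allclose(M.T, np.linalg.inv(M)))     # M^T = M^{-1}

# So the transpose can replace the inverse in the diagonalisation of A
print(np.allclose(M @ np.diag(w) @ M.T, A))
```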

Note that if the eigenvectors are normalised and mutually orthogonal, so that the matrix of eigenvectors is orthogonal, then its inverse is just its transpose and the diagonalising equation simplifies:

$$\begin{aligned}
A &= \left(\begin{matrix}u_1 & u_2\\v_1 & v_2\end{matrix}\right) \left(\begin{matrix}\lambda_1 & 0\\0 & \lambda_2\end{matrix}\right) \left(\begin{matrix}u_1 & u_2\\v_1 & v_2\end{matrix}\right)^{-1}\\
&= \left(\begin{matrix}u_1 & u_2\\v_1 & v_2\end{matrix}\right) \left(\begin{matrix}\lambda_1 & 0\\0 & \lambda_2\end{matrix}\right) \left(\begin{matrix}u_1 & v_1\\u_2 & v_2\end{matrix}\right).
\end{aligned}$$

Summary

Matrix representation of simultaneous equations

Matrix-vector and matrix-matrix multiplication

Determinant, inverse and transpose

Null space of singular matrices

Finding eigenvalues and eigenvectors

Python for solving systems, finding inverse, null space and eigenvalues/vectors

Diagonalising matrices (we will use this for systems of differential equations)

Introductory problems

Introductory problems 1

$$A = \left(\begin{array}{cc} 1 & 0 \\ 0 & i \end{array}\right); \quad B = \left(\begin{array}{cc} 0 & i \\ i & 0 \end{array}\right); \quad C = \left(\begin{array}{cc} \frac{1}{\sqrt{2}} & \frac{1}{2}\left(1-i\right) \\ \frac{1}{2}\left(1+i\right) & -\frac{1}{\sqrt{2}} \end{array}\right);$$

Verify by hand, and using the `numpy.linalg` module, that

$AA^{-1}=A^{-1}A =I$

$BB^{-1}=B^{-1}B =I$

$CC^{-1}=C^{-1}C =I$

```python
# hint
import numpy as np

# In Python the imaginary unit is "1j"
A = np.array([[1, 0], [0, 1j]])

# Use @ (matrix multiplication); * would multiply element by element
print(A @ np.linalg.inv(A))
```

Introductory problems 2

Let $A$ be an $n \times n$ matrix, $I$ the $n\times n$ identity matrix and $B$ an $n\times n$ matrix.

Suppose that $ABA^{-1}=I$. Show that $B = I$ for any such (invertible) $A$.

Main problems

Main problems 1

Let $A$ be the coefficient matrix of the linear system

$$\begin{aligned}
-x_1 - 2 x_2 &= 1,\\
2 x_1 + 3 x_2 &= -1.
\end{aligned}$$

Solve the system by finding the inverse matrix $A^{-1}$.

Let $\mathbf{x} = \left(\begin{array}{c} x_1 \\ x_2 \end{array}\right)$ denote the solution.

Calculate and simplify $A^{2017} \mathbf{x}$.

Main problems 2

For each of the following matrices

$$A = \left(\begin{array}{cc} 2 & 3 \\ 1 & 4 \end{array}\right); \quad B = \left(\begin{array}{cc} 4 & 2 \\ 6 & 8 \end{array}\right); \quad C = \left(\begin{array}{cc} 1 & 4 \\ 1 & 1 \end{array}\right); \quad D = \left(\begin{array}{cc} x & 0 \\ 0 & y \end{array}\right),$$

compute the determinant, eigenvalues and eigenvectors by hand.

Check your results by verifying that $Q\mathbf{x} = \lambda_i \mathbf{x}$ for $Q=A$, $B$, $C$ and $D$, using the `numpy.linalg` module.

```python
# hint
import numpy as np

A = np.array([[2, 3], [1, 4]])
e_vals, e_vecs = np.linalg.eig(A)
print(e_vals)
print(e_vecs)
```

Main problems 3

Two vectors $\mathbf{x_1}$ and $\mathbf{x_2}$ are orthogonal if their dot/scalar product is zero:

$$\mathbf{x_1}.\mathbf{x_2}=\left(\begin{array}{c} 2 \\ -1 \\ 3 \end{array} \right).\left(\begin{array}{c} 2 \\ 7 \\ 1 \end{array} \right)=(2{\times}2)+(-1{\times}7)+(3{\times}1)=4-7+3=0,$$

thus $\mathbf{x_1}$ and $\mathbf{x_2}$ are orthogonal.

Find vectors that are orthogonal to $\left(\begin{array}{c} 1 \\ 2 \end{array}\right)$, and vectors that are orthogonal to $\left(\begin{array}{c} 1 \\ 2 \\ -1 \end{array}\right)$.

How many such vectors are there?

Main problems 4

Diagonalize the $2 \times 2$ matrix $A=\left(\begin{array}{cc} 2 & -1 \\ -1 & 2 \end{array} \right)$ by finding a matrix $S$ and a diagonal matrix $D$ such that $A = SDS^{-1}$.

Main problems 5

Find a $2\times 2$ matrix $A$ such that

$$A\left(\begin{array}{c} 2 \\ 1 \end{array} \right) = \left(\begin{array}{c} -1 \\ 4 \end{array} \right)\quad\text{and}\quad A \left(\begin{array}{c} 5 \\ 3\end{array} \right)=\left(\begin{array}{c} 0 \\ 2\end{array} \right).$$

Extension problems

Extension problems 1

If a square matrix $M$ satisfies $M^TM=I$, show that $M^T=M^{-1}$.